Q1 2024

The global rise of online gaming has attracted threat actors, who see monetary transactions as a prime target. These cybercriminals prioritize efficiency, because the ratio of effort to payoff determines their earnings and overall wealth. As a result, online gaming platforms now face persistent threats from both malicious bots and human-driven attacks. Fraud should no longer be accepted as a cost of doing business.

This industry brief draws on data from our comprehensive bot abuse analysis of the top attack vectors in online gaming during Q1, Q2, and Q3 2023. It aims to provide data-driven insights into attacks on the gaming sector, along with effective detection and prevention strategies. The insights come from the Arkose Labs Global Intelligence Network, which includes major corporations and category leaders. Because these organizations are prime targets for cyber threats, they offer a unique vantage point for monitoring and analyzing attack activity.

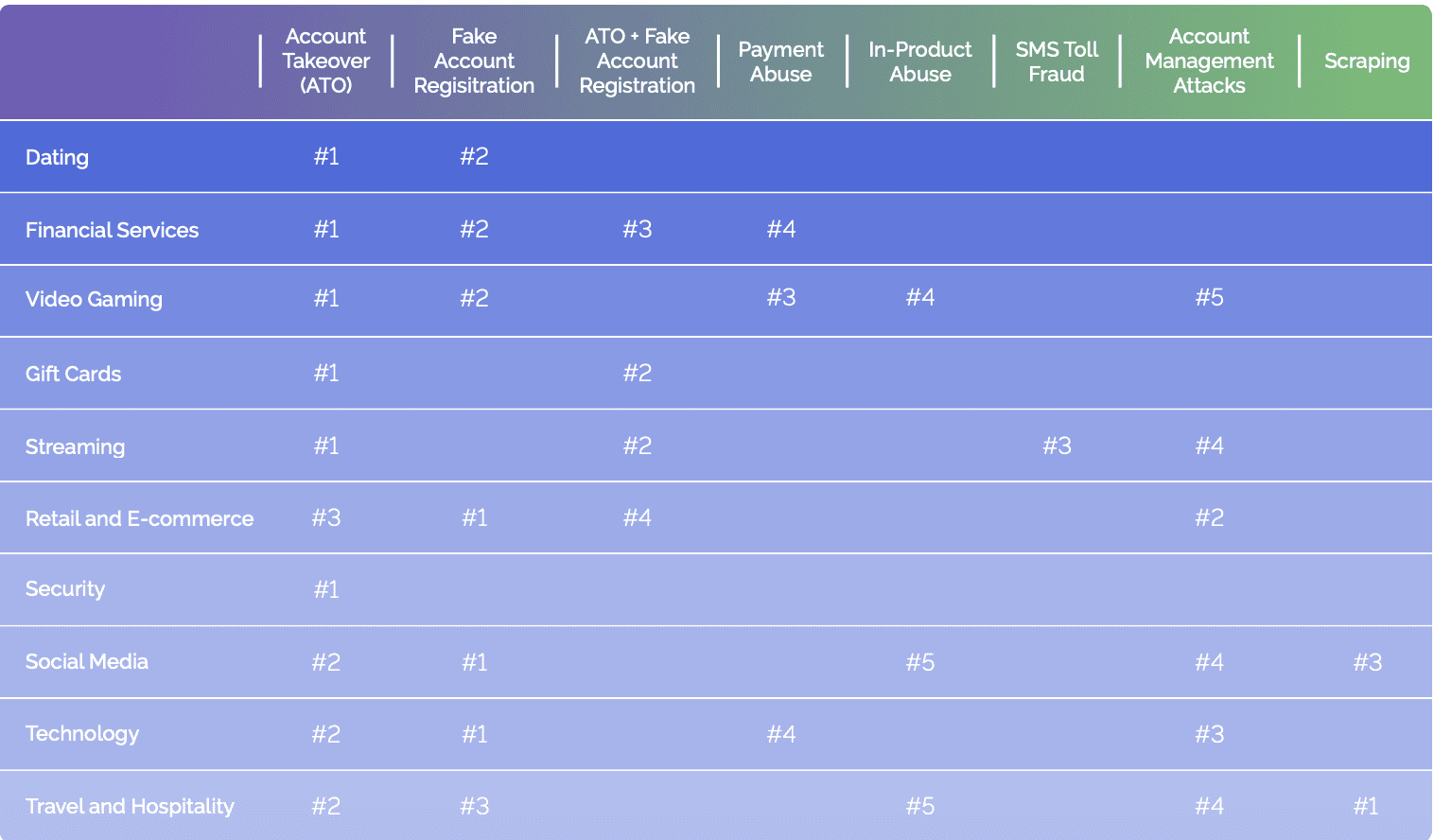

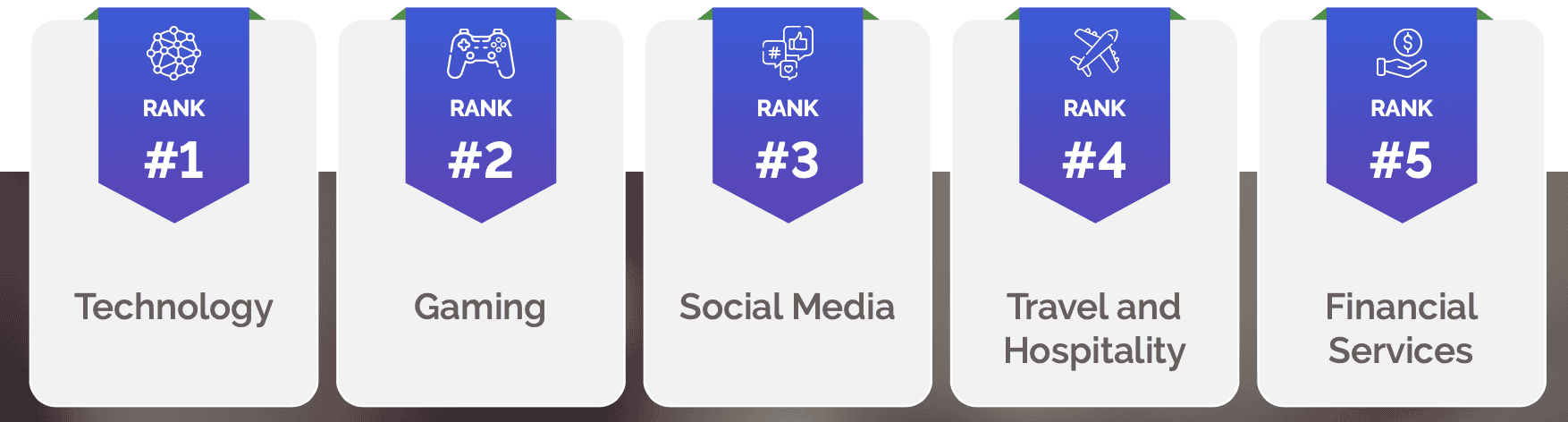

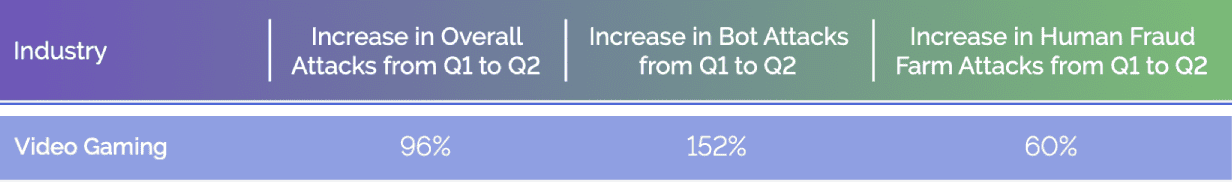

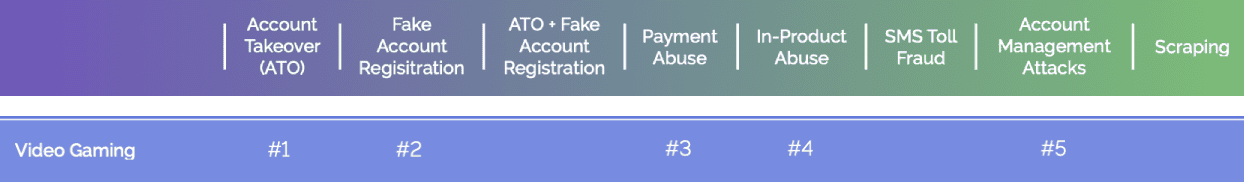

Attack type by industry in H1 2023:

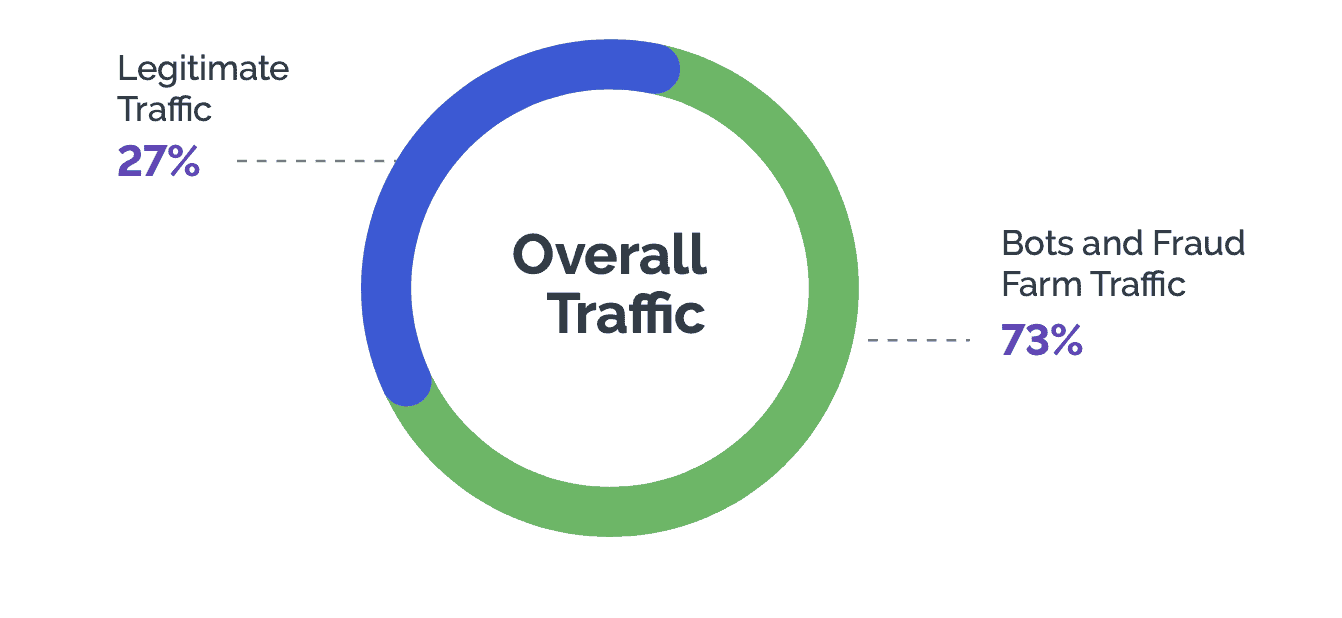

We analyzed billions of sessions worldwide, across industries, between January and September 2023, and assessed three primary attack vectors that fraudsters use to launch cyberattacks. Together, these vectors generated billions of attacks in the first half of 2023 and into Q3, accounting for 73% of the website and app traffic we measured. In other words, nearly three-quarters of the traffic to the digital properties we monitor was malicious.

In the virtual gaming sector, criminals invest substantial time and resources in account takeovers, identity theft, credential stuffing, and other fraud. But when faced with robust site protection, bad actors can no longer achieve the economic gains they seek, and they ultimately move on. This principle underlies the core philosophy of Arkose Labs: making attacks too costly for adversaries to continue.

The Bad Side of Bots

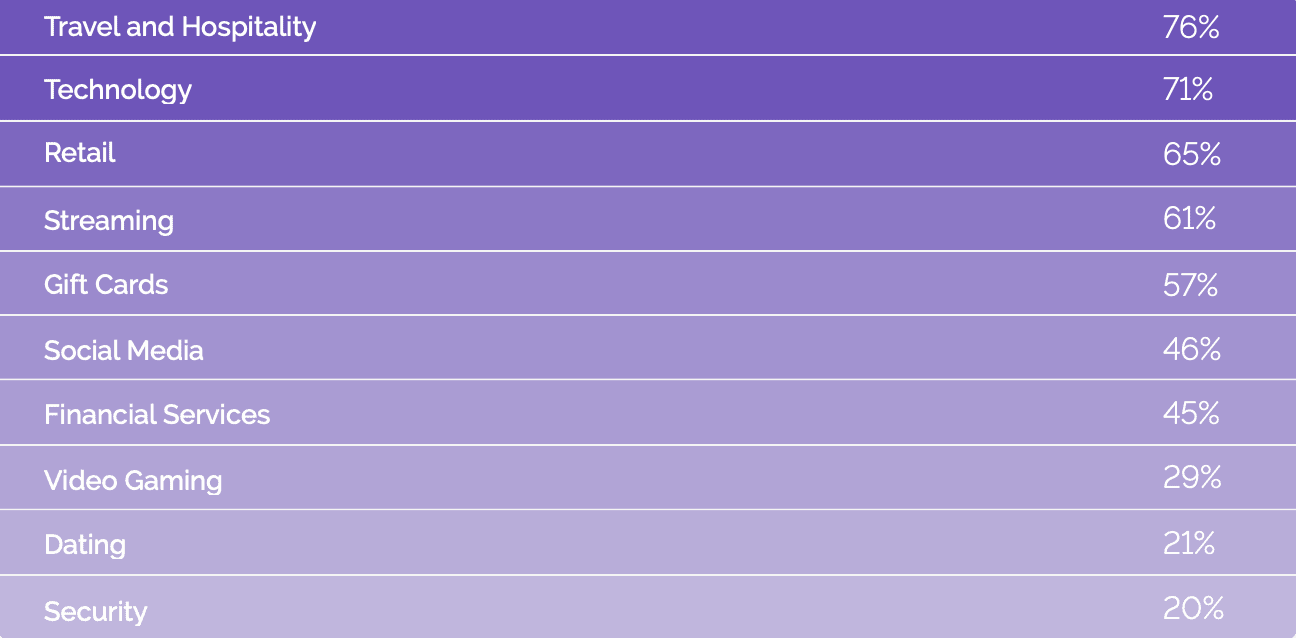

Efficiency is a top priority for cybercriminals because it directly affects their earnings. Malicious bots enable fraudsters to carry out targeted, well-crafted attacks on gaming companies. Notably, bad bots account for 29% of web traffic in online gaming.

The percentage of traffic by industry that comes from bad bots:

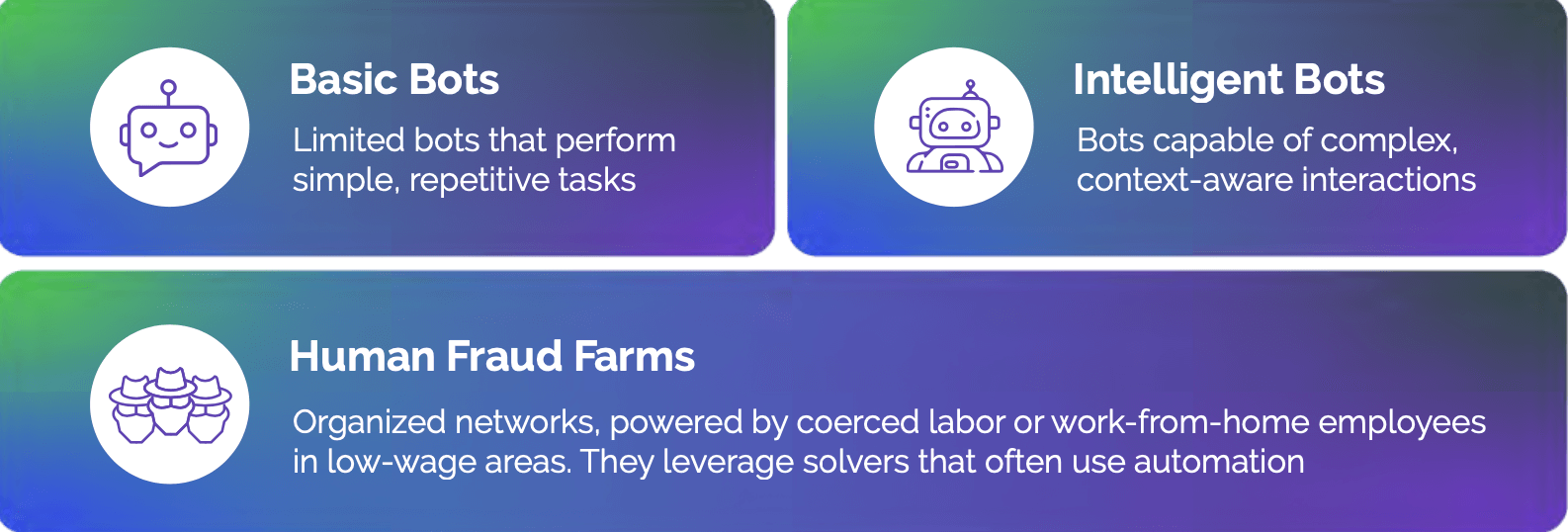

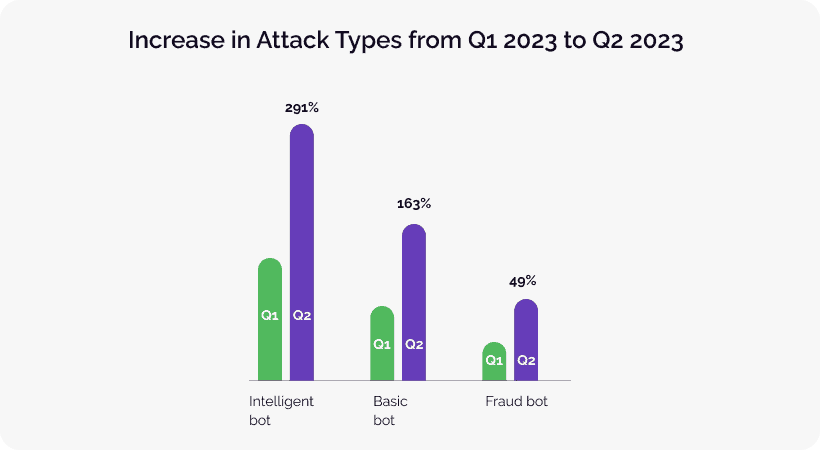

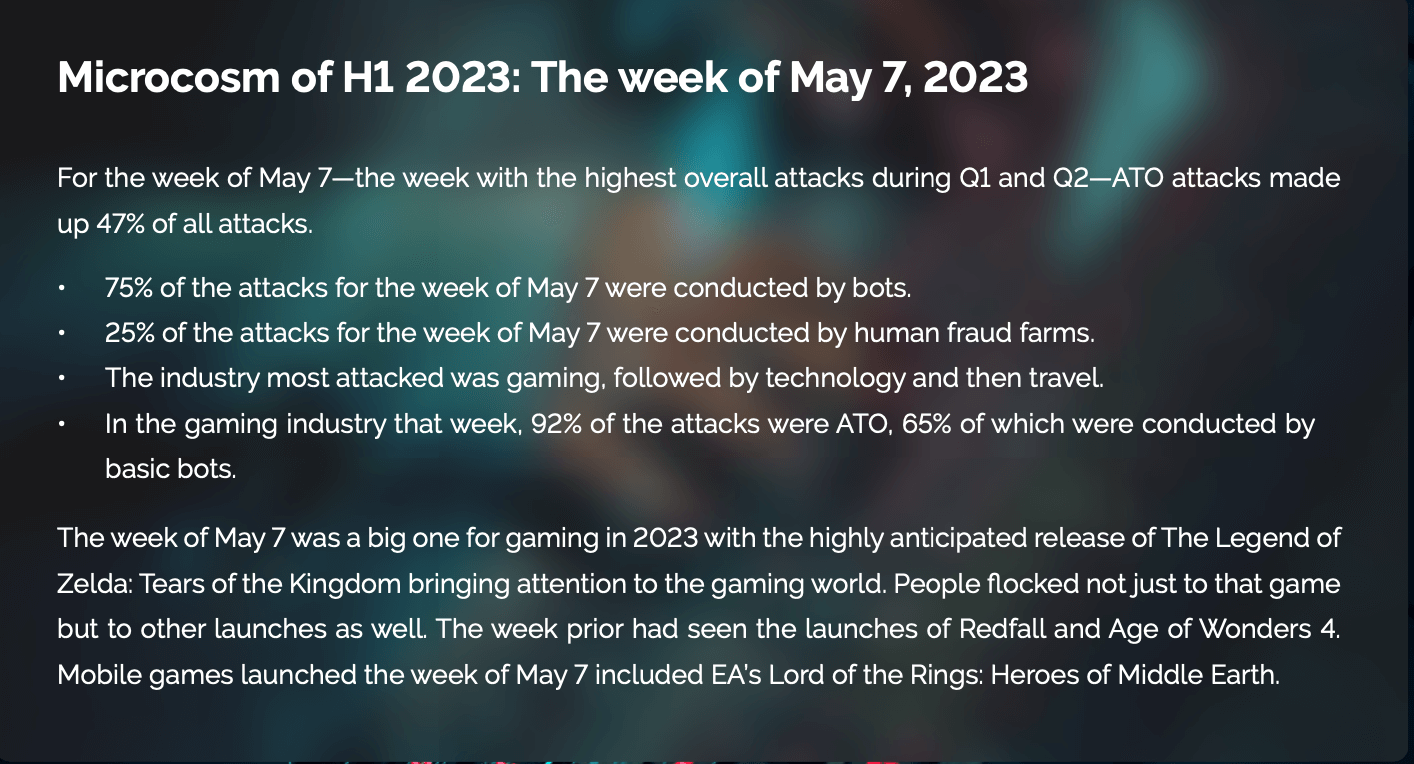

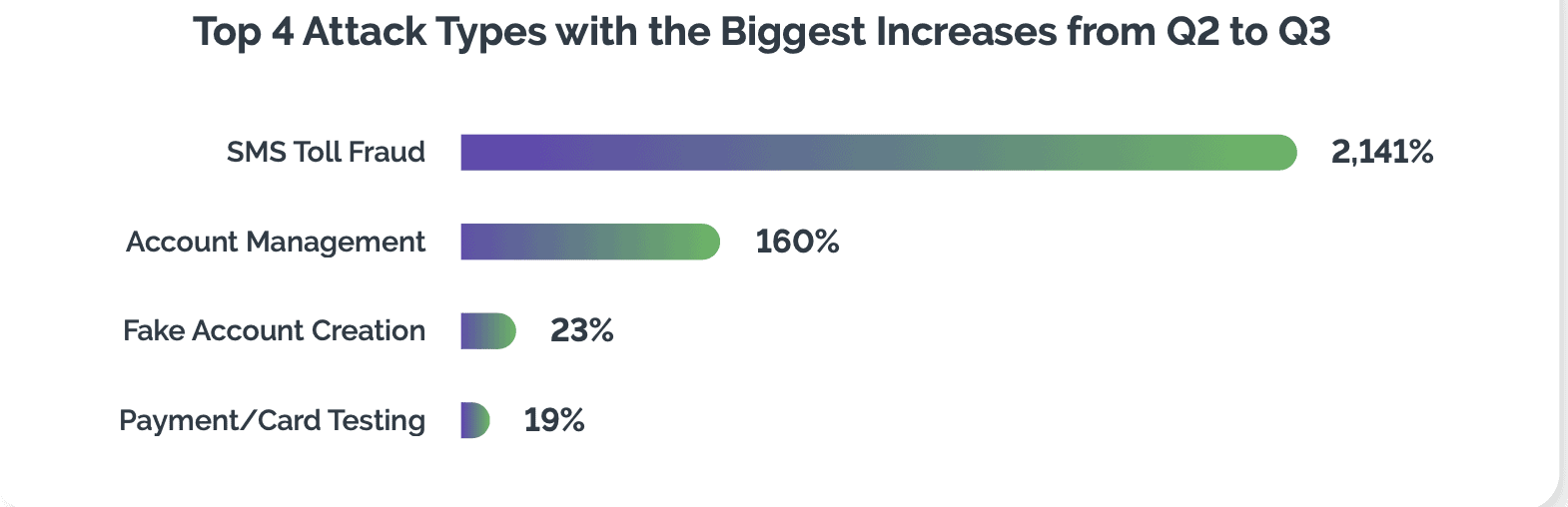

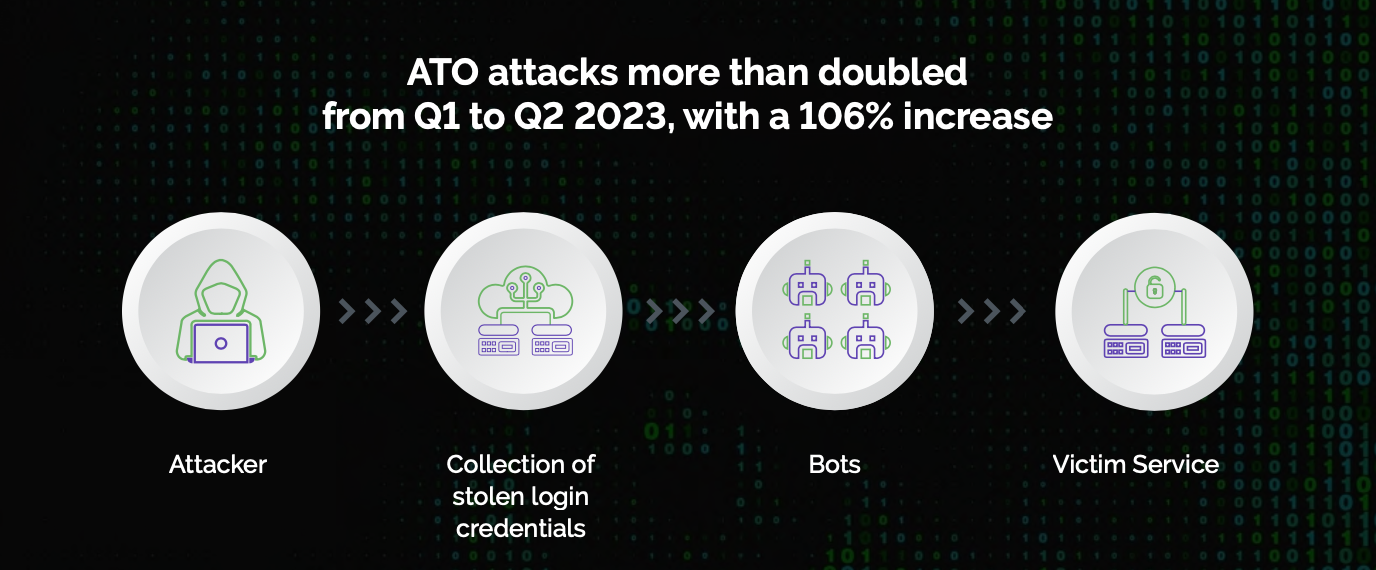

Between Q1 and Q2 2023, intelligent bot traffic increased nearly fourfold. This growth outpaced that of basic bots and was a major driver of the overall surge of approximately 167% in bot attacks over the same period.

In virtual gaming, malicious bots attempt to gain unauthorized access to user accounts, misuse content and virtual currencies, and commit other fraud. With total attacks up 121% in Q2 over Q1 2023, bad bots are now a primary concern in this industry.

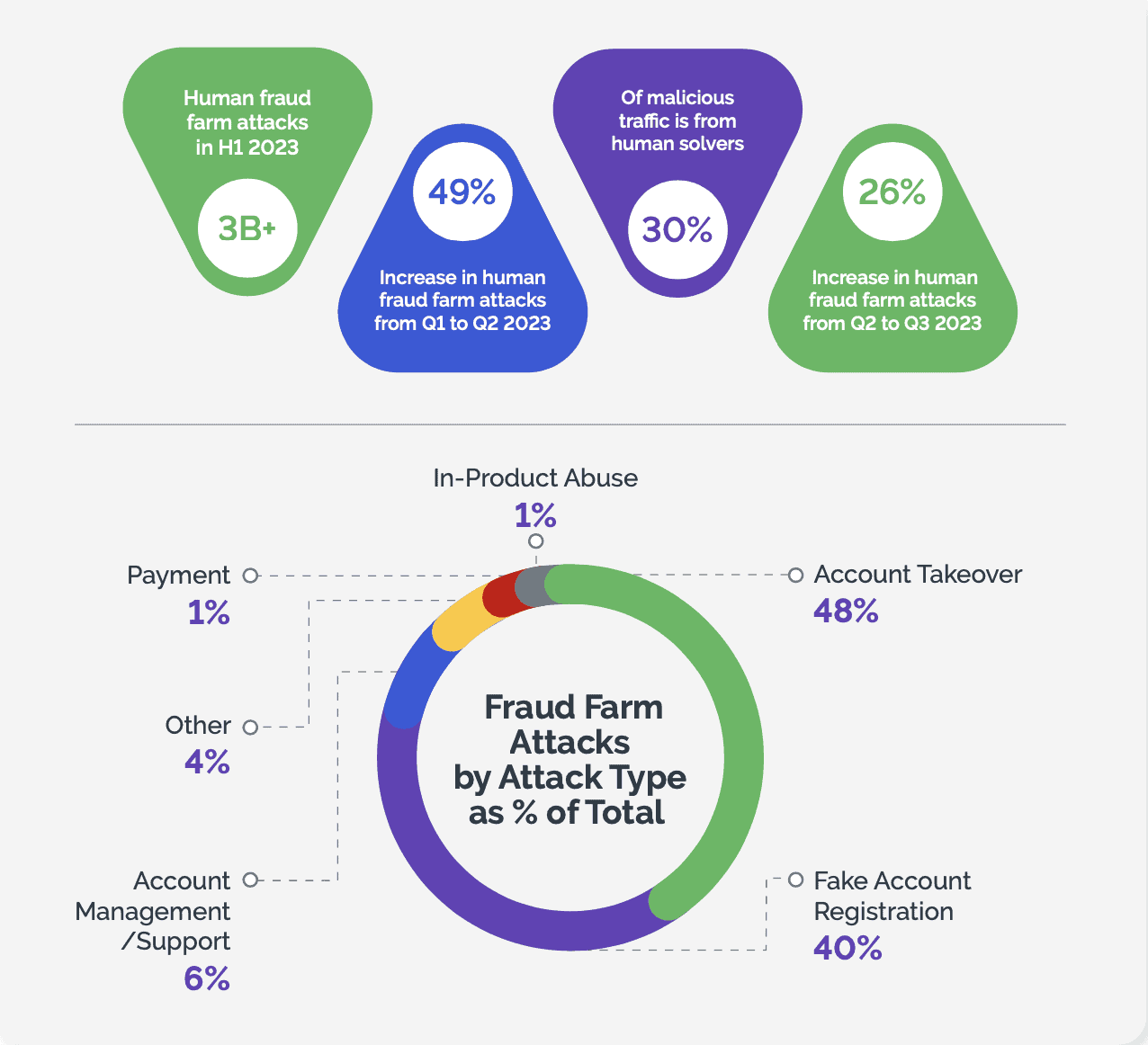

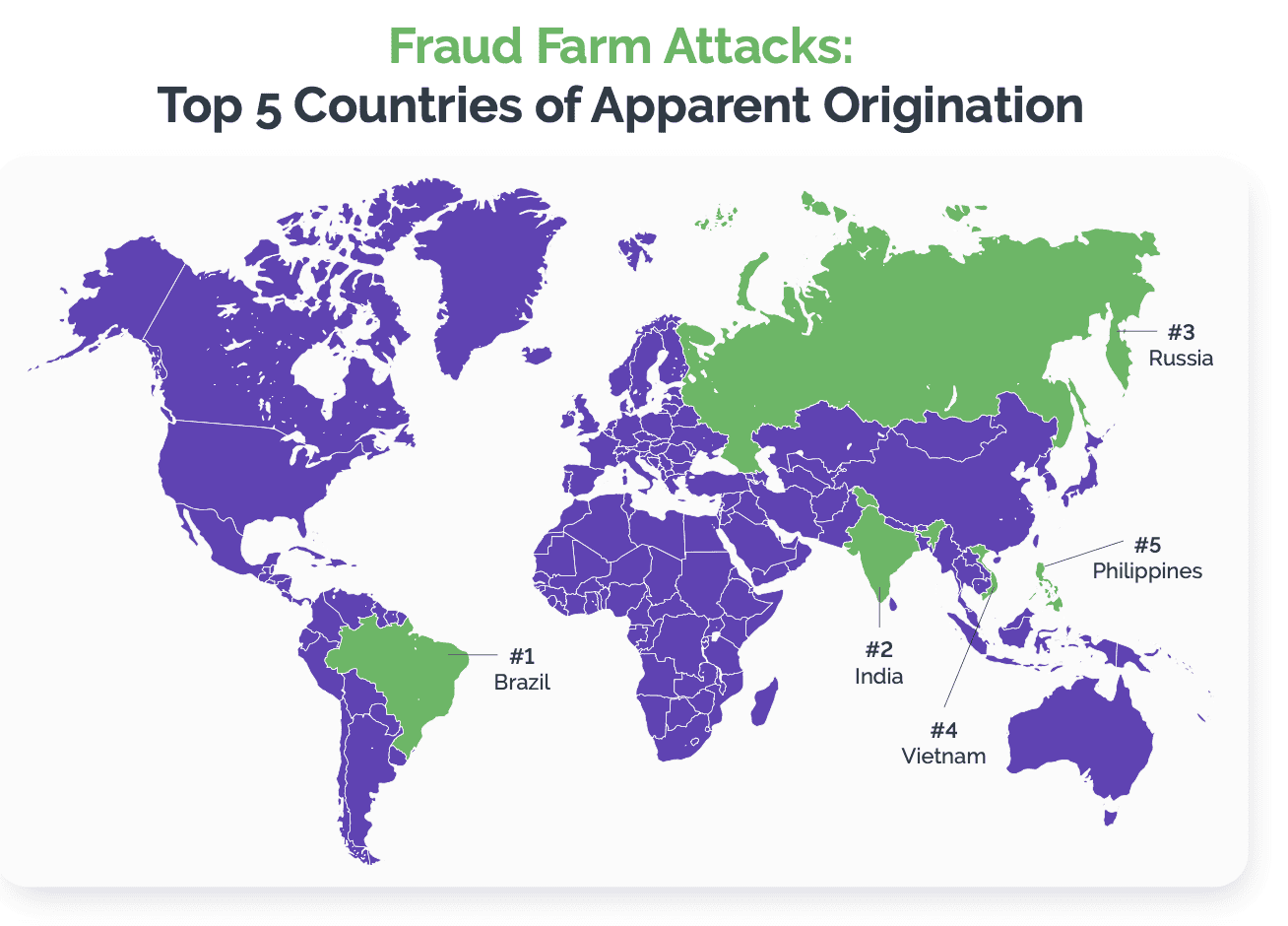

While automated threats are a concern, human-based attacks also rose a marked 26% in Q3 over the preceding quarter. When malicious bots fail to get past security defenses, threat actors turn to human fraud farms to finish the job. This shift carries a significant human cost, often involving forced labor.

Given the financial incentives behind these attacks and the volume of sensitive data at risk, gaming businesses are now considered high-value targets.

Industry Benchmarks

*Early analysis of Q3 data indicates that technology, gaming, social media, and financial services retained their rankings.

Defeating these adversaries requires technology that dynamically targets human solvers with adaptive, time-consuming challenges. With that capability in place, global gaming organizations can undermine the economics of attacks that exploit human labor at scale.
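The economic argument can be made concrete with a simple expected-value model. This is a minimal sketch, assuming an attacker's average profit per attempt is success rate × payout per success − cost per attempt; the class, the break-even helper, and all numbers are hypothetical illustrations, not Arkose Labs data or methodology.

```python
from dataclasses import dataclass


@dataclass
class AttackEconomics:
    """Hypothetical per-attempt economics of a bot or human-solver attack."""
    success_rate: float        # fraction of attempts that succeed (0.0 to 1.0)
    payout_per_success: float  # value the attacker extracts per successful attempt
    cost_per_attempt: float    # infrastructure, proxy, or solver-labor cost per attempt

    def expected_profit_per_attempt(self) -> float:
        # Expected gain from one attempt minus the cost paid on every attempt.
        return self.success_rate * self.payout_per_success - self.cost_per_attempt

    def is_profitable(self) -> bool:
        return self.expected_profit_per_attempt() > 0


def break_even_cost(success_rate: float, payout_per_success: float) -> float:
    """Per-attempt cost above which the attack loses money on average."""
    return success_rate * payout_per_success


# Illustrative numbers only: the same attack before and after time-consuming
# challenges triple the attacker's cost per attempt.
baseline = AttackEconomics(success_rate=0.25, payout_per_success=4.0, cost_per_attempt=0.5)
hardened = AttackEconomics(success_rate=0.25, payout_per_success=4.0, cost_per_attempt=1.5)

print(baseline.expected_profit_per_attempt())  # 0.5
print(hardened.expected_profit_per_attempt())  # -0.5
print(break_even_cost(0.25, 4.0))              # 1.0
```

The model shows two levers: raising the cost of each attempt (slower, harder challenges for human solvers) and lowering the success rate (better detection). Once the cost per attempt exceeds the break-even value, every additional attempt loses money on average.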

Concerning Trends and Crimes in Online Gaming

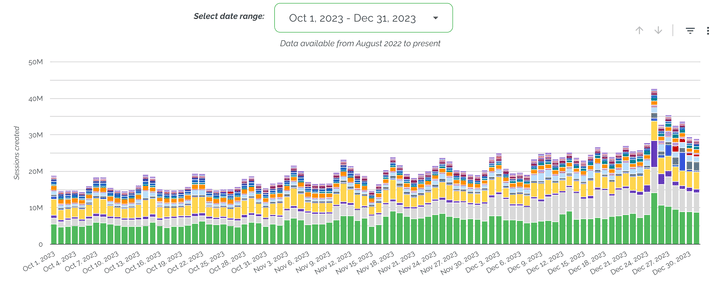

During Q4 2023, our threat intelligence observed a steady rise in overall traffic to gaming customers, peaking on Christmas Day, when gifts are unboxed, at more than twice the quarter's baseline traffic.

At the same time, the number of attacks rose almost tenfold. These attacks, centered mainly on fake account registrations, lay the groundwork for post-holiday schemes such as return and refund fraud.

Fake account creation is causing losses in the millions. Bad actors use cunning strategies, from manipulating the in-game economy by seizing items and reselling them at premium prices to leveling up account profiles for resale. They even engage in in-game botting, accumulating virtual currency by automating participation in thousands of matches.

While gaming companies faced a substantial 69% surge in fake accounts during Q2 compared to Q1, this onslaught appears to have stabilized, with only a marginal 1% increase in Q3 over Q2.

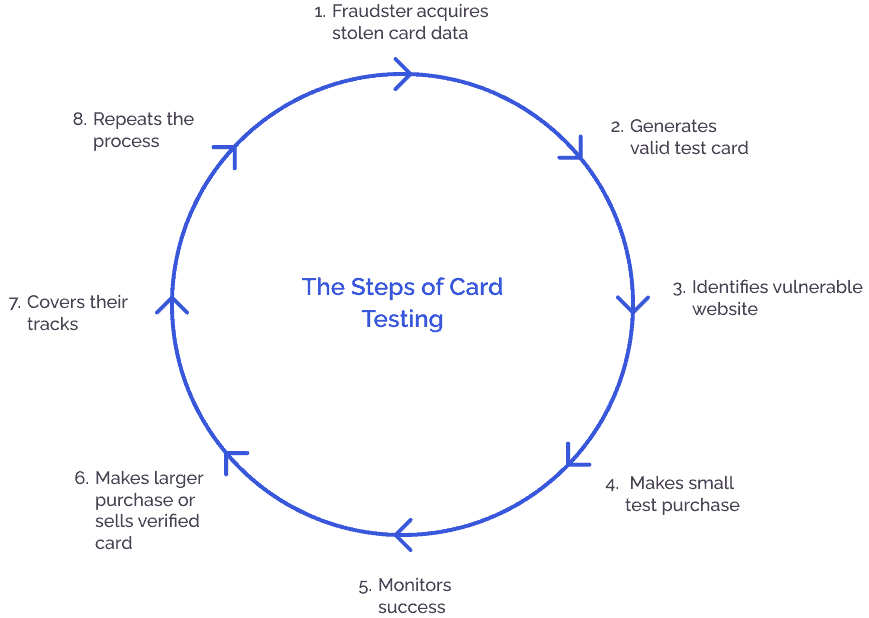

Beyond fake accounts, gaming companies face payment attacks, including card testing. Fraudsters make small transactions to check whether stolen credit card details are valid without raising immediate suspicion from cardholders or financial institutions. Keeping this low profile lets them confirm that the stolen information works before attempting larger transactions.
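One common way to approach card-testing detection is a velocity rule. The sketch below is a minimal illustration, assuming payment attempts can be attributed to a source (account, device, or IP) and that cards are identified by a hashed fingerprint. It flags a source that, within a sliding time window, makes low-value charges on more distinct cards than a threshold allows, or whose low-value charges are mostly declined. The class, field names, and thresholds are hypothetical, not a description of any vendor's detection logic.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class PaymentAttempt:
    source_id: str         # account, device, or IP that made the attempt (hypothetical field)
    card_fingerprint: str  # hashed card identifier (hypothetical field)
    amount: float          # charge amount in the store's currency
    timestamp: float       # seconds since epoch
    approved: bool         # whether the issuer approved the authorization


class CardTestingDetector:
    """Hypothetical sliding-window rule; thresholds are illustrative, not recommendations."""

    def __init__(self, small_amount=2.0, window_seconds=600.0,
                 max_distinct_cards=3, max_decline_ratio=0.5, min_attempts_for_ratio=4):
        self.small_amount = small_amount
        self.window_seconds = window_seconds
        self.max_distinct_cards = max_distinct_cards
        self.max_decline_ratio = max_decline_ratio
        self.min_attempts_for_ratio = min_attempts_for_ratio
        # Recent low-value attempts per source, oldest first.
        self._recent = defaultdict(deque)

    def observe(self, attempt: PaymentAttempt) -> bool:
        """Record the attempt if it is low-value; return True if its source looks like it is testing cards."""
        if attempt.amount > self.small_amount:
            return False  # this rule only looks at small probe charges
        window = self._recent[attempt.source_id]
        window.append(attempt)
        # Evict attempts that are older than the sliding window.
        while window and attempt.timestamp - window[0].timestamp > self.window_seconds:
            window.popleft()
        distinct_cards = len({a.card_fingerprint for a in window})
        declines = sum(1 for a in window if not a.approved)
        many_cards = distinct_cards > self.max_distinct_cards
        mostly_declined = (len(window) >= self.min_attempts_for_ratio
                           and declines / len(window) > self.max_decline_ratio)
        return many_cards or mostly_declined


detector = CardTestingDetector()
# One account trying five different cards at 1.00 each, 30 seconds apart.
flags = [
    detector.observe(PaymentAttempt("acct-1", f"card-{i}", 1.0, 30.0 * i, i % 2 == 0))
    for i in range(5)
]
print(flags)  # [False, False, False, True, True]
```

Keying the rule on the source rather than the card reflects how card testing typically looks from the merchant's side: one actor cycling through many stolen cards, each charged only once or twice.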

From Q1 to Q2, payment attacks, including card testing, rose 30% across all industries. In gaming, they have trended steadily upward, climbing 29% in Q2 over Q1 and a further 15% in Q3 over Q2.

Account management attacks, however, have caught many gaming companies off guard. Fraudsters' attempts to change passwords and tamper with other account administration tasks skyrocketed 313% in Q2 over Q1, followed by a further 61% surge in Q3 over Q2. The online gaming security landscape is evolving, and vigilance is more crucial than ever.
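Because these figures are quarter-over-quarter changes, the cumulative Q1-to-Q3 change has to be compounded rather than summed: a 29% rise followed by a 15% rise is 1.29 × 1.15 ≈ 1.48, or about +48%, not +44%. The short sketch below applies that arithmetic to the gaming figures quoted in this section. The cumulative numbers it prints are derived from the brief's quarterly figures, not separately reported results.

```python
def cumulative_change(*quarterly_pct_changes: float) -> float:
    """Compound successive period-over-period % changes into one overall % change."""
    factor = 1.0
    for pct in quarterly_pct_changes:
        factor *= 1.0 + pct / 100.0  # e.g. +29% becomes a multiplier of 1.29
    return (factor - 1.0) * 100.0


# Gaming figures from this brief: (Q2 over Q1, Q3 over Q2), in percent.
reported = {
    "fake accounts": (69, 1),
    "payment attacks": (29, 15),
    "account management attacks": (313, 61),
}

for name, changes in reported.items():
    print(f"{name}: {cumulative_change(*changes):+.0f}% from Q1 to Q3")
```

By this arithmetic, fake accounts end Q3 roughly 71% above Q1, payment attacks roughly 48% above, and account management attacks roughly 565% above, about 6.6 times their Q1 level.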

Two Bot Threats in Online Gaming

The surge in bot and human fraud farm attacks is driven by two technology trends influenced by powerful economic forces:

1. Generative AI (GenAI)

Over the last year, and especially in the past six months, our threat research has documented a significant uptick in the use of GenAI to craft convincing phishing attacks within the gaming sector. Fraudsters use AI-generated content to mimic legitimate gaming communications, tricking users into revealing information such as login credentials or payment details. This sophistication makes it difficult for players and gaming companies to distinguish authentic communications from bogus ones.

Cybercriminals are also using AI-powered bots to carry out account takeover (ATO) attacks, a predominant threat across many industries, including gaming. As with phishing, this technology lets fraudsters mimic human behavior, so their bots interact with gaming platforms much as a real user would.

For example, these bots can simulate realistic gameplay patterns, chat interactions, and even social exchanges within gaming communities, making it hard for traditional security tools to tell bots from real players. Of particular concern is that GenAI enables highly adaptive bots that challenge traditional measures such as CAPTCHAs.

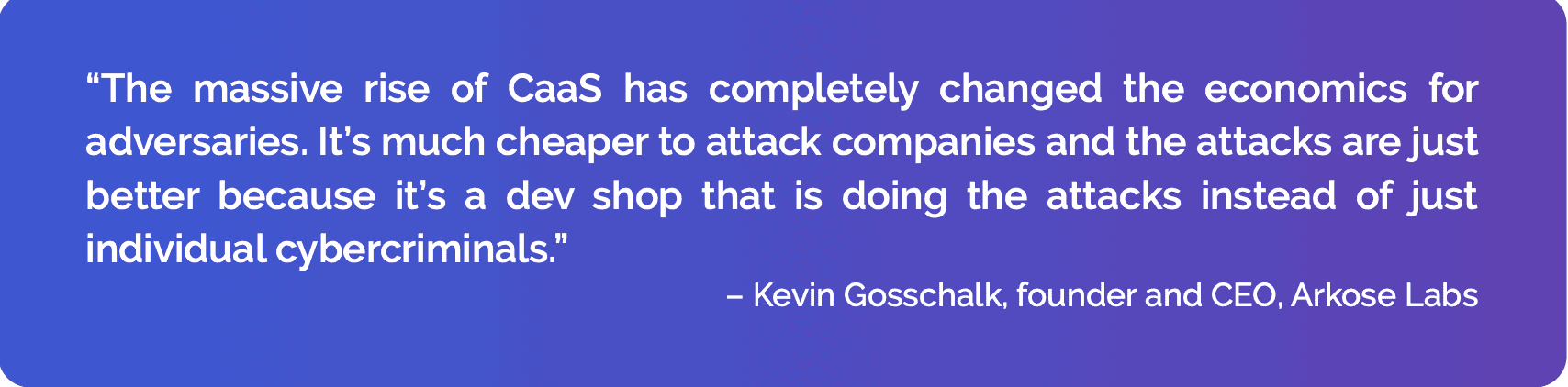

2. Cybercrime-as-a-Service (CaaS)

The CaaS model is fueling a surge in attacks that cause trillions in damages. In online gaming, it gives fraudsters easy access to advanced tools built for gaming-related attacks, lowering barriers to entry and widening participation in online crime.

These platforms act as marketplaces for a wide range of hacking tools, enabling game-server compromises, in-game currency manipulation, and cheating that undermines fair play. The repercussions include ATO incidents and identity theft, which inflict financial losses and reputational damage on players and gaming platforms alike.

One example of CaaS impacting online gaming involves the availability of AI-driven cheating tools offered “as-a-service.” These cheating services, often provided through illicit online marketplaces operating as CaaS platforms, enable users to employ advanced aimbots, wallhacks, and other game-enhancing tools with unprecedented precision and effectiveness.

For instance, an AI-driven aimbot could dynamically adjust its targeting to an opponent's movements, making it exceptionally difficult for legitimate players to compete fairly. CaaS offerings are not only readily accessible but also keep adapting to gaming countermeasures.

Arkose Labs Can Help

Arkose Labs safeguards businesses by disrupting the financial incentives driving bot attacks. Our long-term bot mitigation and account security solutions focus on protecting critical user touch-points: account login and registration. By identifying hidden attack signals and undermining attackers' return on investment, we enhance security without compromising user experience.

Our unique platform, Arkose Bot Manager, analyzes user session data to assess context, behavior, and reputation, and classifies traffic according to its risk profile. Suspicious traffic is served enforcement challenges that separate legitimate users from fraudsters, blocking automated activity and keeping the gaming experience secure for consumers.
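The brief does not describe how Bot Manager scores sessions, so the following is only a generic illustration of the pattern described above: combine context, behavior, and reputation signals into a single risk score, then route each session to an enforcement tier. The signal definitions, weights, thresholds, and the three-tier split are all hypothetical assumptions, not Arkose Bot Manager's actual model or API.

```python
from dataclasses import dataclass
from enum import Enum

# Hypothetical sketch; not Arkose Bot Manager's model or API.


class Action(Enum):
    ALLOW = "allow"                    # low risk: no added friction
    CHALLENGE = "challenge"            # elevated risk: standard enforcement challenge
    HARD_CHALLENGE = "hard_challenge"  # high risk: harder, more time-consuming challenge


@dataclass
class SessionSignals:
    """Hypothetical per-session signals, each normalized to 0.0 (benign) to 1.0 (risky)."""
    context: float     # e.g. inconsistent device or network attributes
    behavior: float    # e.g. non-human timing or navigation patterns
    reputation: float  # e.g. prior abuse linked to the IP, device, or account


# Illustrative weights (summing to 1.0) and thresholds; a real system would tune these.
WEIGHTS = {"context": 0.3, "behavior": 0.4, "reputation": 0.3}
CHALLENGE_AT = 0.4
HARD_CHALLENGE_AT = 0.75


def risk_score(s: SessionSignals) -> float:
    """Weighted sum; for inputs in 0.0 to 1.0 the score also stays in that range."""
    return (WEIGHTS["context"] * s.context
            + WEIGHTS["behavior"] * s.behavior
            + WEIGHTS["reputation"] * s.reputation)


def decide(s: SessionSignals) -> Action:
    """Map a session's risk score to an enforcement tier."""
    score = risk_score(s)
    if score >= HARD_CHALLENGE_AT:
        return Action.HARD_CHALLENGE
    if score >= CHALLENGE_AT:
        return Action.CHALLENGE
    return Action.ALLOW


print(decide(SessionSignals(context=0.0, behavior=0.1, reputation=0.0)))  # Action.ALLOW
print(decide(SessionSignals(context=0.5, behavior=0.5, reputation=0.5)))  # Action.CHALLENGE
print(decide(SessionSignals(context=1.0, behavior=0.9, reputation=0.8)))  # Action.HARD_CHALLENGE
```

Grading the response, rather than simply allowing or blocking, lets most legitimate users pass without friction while riskier traffic meets progressively costlier challenges.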
