It might sound odd that a cybersecurity company would hire a team of artists, but who else can craft images that are both aesthetically pleasing and easy to understand while hitting difficult technical specs? We've gone through many generations of challenge design, creating new ones only to find out a few months later that attackers had automated them. One of our main roles is testing our challenges against machine learning and trying to break them with conventional computer vision algorithms. Many designs I thought would be strong turned out to be weak, and many designs I had dismissed as weak lasted much longer than I expected. Through that experience, my team and I have become very good at evaluating CAPTCHA designs, and we can estimate with a high level of confidence how difficult a given design would be for an attacker to automate.

Because of this work, we've noticed a worrying rise in the number of CAPTCHAs that come with massive claims of efficacy from their makers but that, on closer examination, are very weak against automation. Furthermore, the groups creating them appear not even to be attempting a functional CAPTCHA design, relying instead on questionable detection techniques for most of their defense. I'm writing this post to share my insights, to start a dialogue, and to help raise your awareness of a weakness that may exist in the fortress you're building to protect your company's greatest asset: the online environment for your consumers.

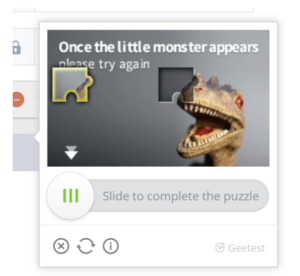

Of note are the “tick this box” checkbox, the “press and hold” button, and the puzzle-piece CAPTCHA.

Read on to learn how attackers easily bypass them!

What makes an effective CAPTCHA?

A functional CAPTCHA requires a challenge that is significantly more difficult for attackers to get through than it is for legitimate users. You can almost always design an interface that forces a programmer to do something special to get past it, but that alone doesn't make it secure.

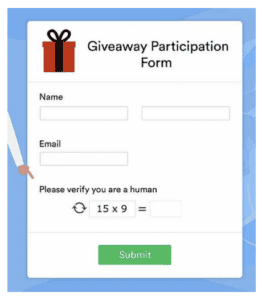

One example is this math CAPTCHA, which asks users to solve a simple arithmetic problem.

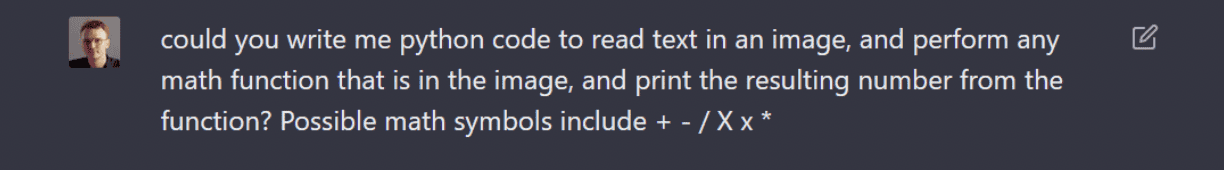

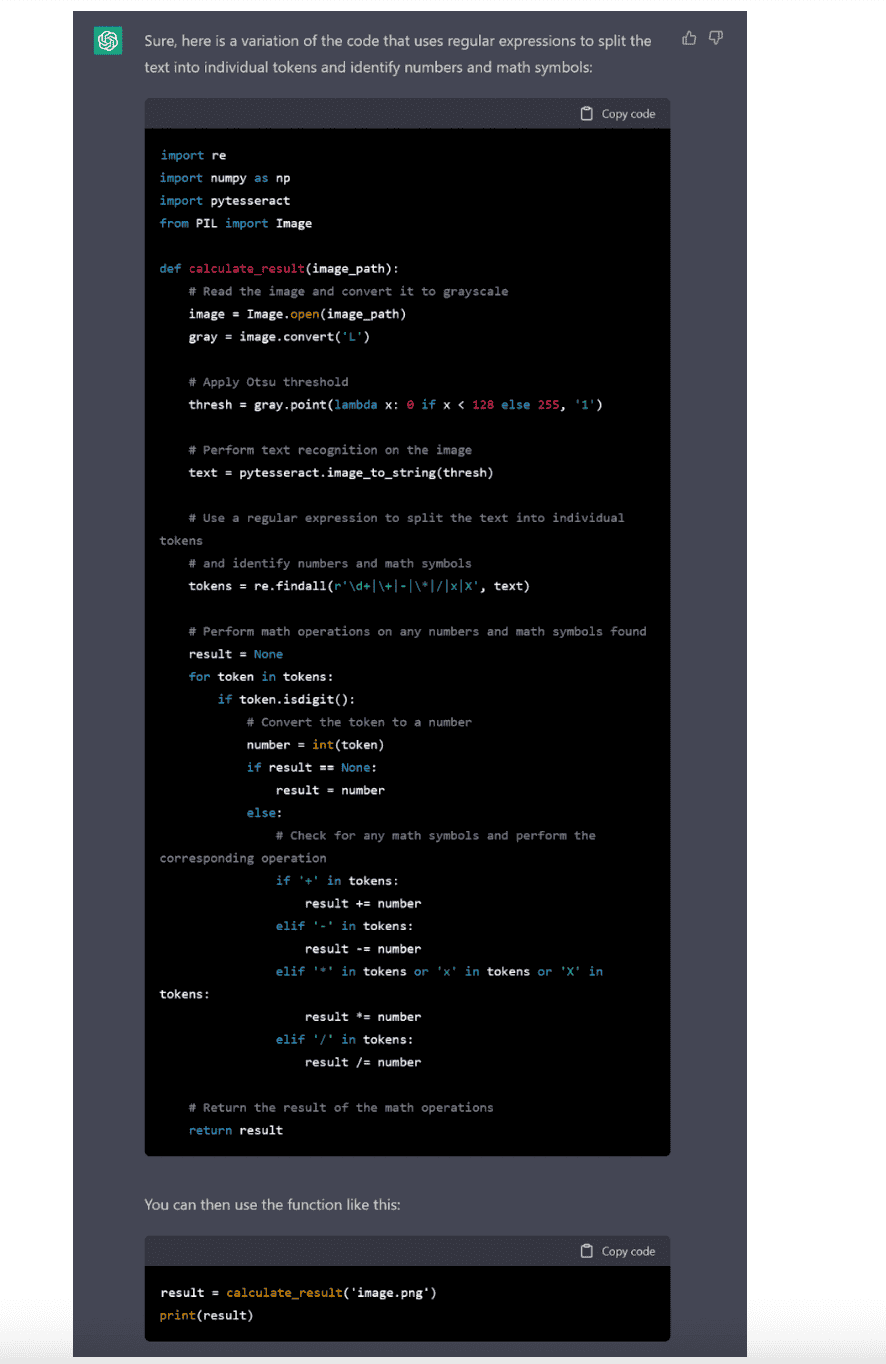

An attacker would need an algorithm to solve the equation, but that's a trivial amount of work: with ChatGPT's help, it took me all of five minutes.
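Here is a minimal Python sketch of such a solver, to give a sense of how little code is involved. It assumes the challenge text is already available as a string, for example scraped from the page or read with OCR. It also assumes the text contains a single `a op b` expression. The function name and the challenge format are illustrative; this is not the code ChatGPT produced.

```python
import operator
import re

# Hypothetical challenge format: one "a op b" expression, e.g. "What is 7 + 3?"
OPERATORS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "x": operator.mul}
EXPRESSION = re.compile(r"(-?\d+)\s*([+\-*x])\s*(-?\d+)")


def solve_math_captcha(text: str) -> int:
    """Find the first 'a op b' expression in the challenge text and evaluate it."""
    match = EXPRESSION.search(text)
    if match is None:
        raise ValueError(f"no arithmetic expression found in {text!r}")
    left, op, right = match.groups()
    return OPERATORS[op](int(left), int(right))


print(solve_math_captcha("What is 7 + 3?"))  # → 10
```

The sketch uses a small operator table rather than `eval`, so it never executes arbitrary text taken from the page.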

This means that a functional CAPTCHA must be complex enough that modern machine learning tools can't simply be rolled out to defeat it. Obviously, this math CAPTCHA is not a secure design, but many companies have released static challenges that are not much harder to defeat with these tools.

Instead, you need a format that lets the security team continually update and change the challenge. That way attackers are chasing a moving target and keep losing money as they try, and fail, to keep up. What worries me is that we've seen many claims in the field that these sorts of CAPTCHAs can exist purely to capture data for other systems to act on, without providing any actual defense themselves.

Behavioral biometrics is data hungry

Biometric analysis is a popular way to try to spot bot attacks. This method looks for unusual behavior that could be a sign of an attack.

But this technique needs copious amounts of data, and simple static CAPTCHAs can detect only low-skill attacks because the biometrics collected are of short duration and low quality. All users are asked to perform the same action, and there are limited ways a user can sensibly interact with the challenges. This means that in order for a system to detect bots, it must pick up on very subtle cues.
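The minimal Python sketch below makes the "short duration and low quality" point concrete. It computes the kind of coarse features a detector might extract from a single slider drag. The `(t_ms, x, y)` sample format and the feature names are hypothetical and are not taken from any vendor's model.

A scripted drag that moves in a perfectly straight line at constant speed stands out immediately, with straightness 1.0 and speed variation 0.0. Beyond that, a handful of numbers from a one-second gesture leaves little to work with. This fits the point that such short traces mainly catch low-skill attackers.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical sample format: (time in ms, x, y) for one pointer drag.
Sample = Tuple[float, float, float]


def trace_features(samples: List[Sample]) -> Dict[str, float]:
    """Compute a few coarse, illustrative features from a short pointer trace."""
    if len(samples) < 3:
        raise ValueError("need at least three samples")
    steps: List[float] = []
    speeds: List[float] = []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dist = math.hypot(x1 - x0, y1 - y0)
        steps.append(dist)
        speeds.append(dist / max(t1 - t0, 1e-9))  # guard against equal timestamps
    path_length = sum(steps)
    _, xs, ys = samples[0]
    _, xe, ye = samples[-1]
    displacement = math.hypot(xe - xs, ye - ys)
    mean_speed = sum(speeds) / len(speeds)
    speed_sd = math.sqrt(sum((s - mean_speed) ** 2 for s in speeds) / len(speeds))
    return {
        "duration_ms": samples[-1][0] - samples[0][0],
        "num_samples": float(len(samples)),
        # 1.0 means a perfectly straight path from start to end.
        "straightness": displacement / path_length if path_length else 1.0,
        # Coefficient of variation of speed: 0.0 means perfectly constant speed.
        "speed_cv": speed_sd / mean_speed if mean_speed else 0.0,
    }


# A naive scripted drag: straight line, constant speed.
bot_drag = [(i * 10.0, 100.0 + i * 5.0, 40.0) for i in range(10)]
print(trace_features(bot_drag))
# → {'duration_ms': 90.0, 'num_samples': 10.0, 'straightness': 1.0, 'speed_cv': 0.0}
```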

Effective biometric analysis is possible, but it requires more data for a machine learning system to analyze than a simple puzzle or checkbox can provide. I believe checkboxes and simple CAPTCHAs are used mainly for the impression of security they give to customers and users. If users have no touchpoints with the security system, the work being done in the background is invisible to them.

These challenges also give no ground-truth signal when an attack outsmarts the system. With a setup like this, you have to trust the background system to detect attacks, even new ones that have never been seen before. By definition, it categorizes traffic that passes as “good” and traffic that does not as “bad.”

Static CAPTCHAs are not secure

While it’s easy to see how the checkbox and press-and-hold buttons are ineffective, the puzzle variant requires more explanation.

Puzzle-piece challenges, such as the one pictured above, are often said to be superior. To a layman, these claims seem to have merit: the challenges use random photos and require a slightly different input every round. But to be effective, the challenge must be harder for attackers to solve than for humans. The truth is, these challenges can be automated with computer vision techniques developed decades ago. Even text CAPTCHAs, which every serious company has already discarded, put up more resistance than this form of challenge.

Multiple companies sell this solution, and free versions of the concept were published as long as four years ago.

The puzzle piece is delivered as a PNG image with transparency. Once attackers have captured the background image and the transparent PNG, they need only run a simple edge-detection algorithm. That algorithm finds where the piece's outline matches the edges in the background, which reveals the position of the puzzle slot. This is easy because the puzzle pieces are never rotated and there is no effort to obscure the position of the slot. Once attackers know where the slot is, they have all the information needed to move the piece into it.
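The minimal pure-Python sketch below illustrates this edge-matching idea under three assumptions:

- Both images are already decoded into rows of grayscale values.
- The piece's alpha channel is available.
- The slot sits on a known row `y`, which is common in slider puzzles but is an assumption here.

The sketch takes the piece's outline from the alpha channel and builds a basic gradient-magnitude edge map of the background. It then slides the outline along that row to find the x-offset where the two overlap most strongly. The function names are illustrative. A real attacker would more likely reach for an image library, and real slots are often shaded or outlined rather than simply darkened.

```python
import math
from typing import List, Tuple

# Assumed input format: images already decoded to rows of grayscale values.
Image = List[List[float]]


def edge_magnitude(img: Image) -> Image:
    """Gradient magnitude from central differences: a very basic edge detector."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Clamp indices at the borders so edge pixels still get a difference.
            gx = (img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]) / 2
            gy = (img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]) / 2
            out[y][x] = math.hypot(gx, gy)
    return out


def piece_outline(alpha: Image, threshold: float = 0.5) -> List[Tuple[int, int]]:
    """(row, col) offsets of opaque piece pixels that border a transparent pixel."""
    h, w = len(alpha), len(alpha[0])
    cutoff = threshold * max(max(row) for row in alpha)
    opaque = [[value > cutoff for value in row] for row in alpha]

    def is_opaque(y: int, x: int) -> bool:
        return 0 <= y < h and 0 <= x < w and opaque[y][x]

    neighbours = ((-1, 0), (1, 0), (0, -1), (0, 1))
    return [
        (y, x)
        for y in range(h)
        for x in range(w)
        if opaque[y][x] and not all(is_opaque(y + dy, x + dx) for dy, dx in neighbours)
    ]


def find_slot_x(background: Image, alpha: Image, y: int) -> int:
    """Slide the piece outline along row y of the background and return the
    x-offset where the outline overlaps the strongest edges (the slot border)."""
    edges = edge_magnitude(background)
    outline = piece_outline(alpha)
    piece_width = len(alpha[0])
    best_x, best_score = 0, -1.0
    for x in range(len(background[0]) - piece_width + 1):
        score = sum(edges[y + dy][x + dx] for dy, dx in outline)
        if score > best_score:
            best_x, best_score = x, score
    return best_x
```

Consider a synthetic 200 x 60 gradient background with a 20 x 20 slot darkened by 80 gray levels at x=123. On that image, `find_slot_x(background, alpha, y=10)` returns 123.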

Edge detection is just one approach. Attackers deploy many others to work around these types of CAPTCHAs, such as:

- After gathering a small number of samples, use machine learning to map each puzzle background to the slot's x-coordinate.

- Use perceptual hashing to memorize the limited set of background variations and look up the x-coordinate for each.

- Use an algorithm to find the placement at which the puzzle piece blends most continuously with the surrounding background.
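The perceptual-hashing approach can be sketched in a few lines of Python. The sketch assumes the vendor draws from a limited pool of background images and puts the slot in the same place whenever a background is reused, so each background only has to be solved once. Average hashing is our choice here; it is one of several perceptual hashes. The `SolvedBackgrounds` cache is hypothetical.

```python
from typing import List, Optional, Tuple

# Assumed input format: images already decoded to rows of grayscale values.
Image = List[List[float]]


def average_hash(img: Image, size: int = 8) -> int:
    """Average hash: block-average down to size x size, then one bit per block
    (1 if the block is brighter than the mean of all blocks)."""
    h, w = len(img), len(img[0])
    bh, bw = h // size, w // size
    blocks = []
    for by in range(size):
        for bx in range(size):
            total = sum(
                img[y][x]
                for y in range(by * bh, (by + 1) * bh)
                for x in range(bx * bw, (bx + 1) * bw)
            )
            blocks.append(total / (bh * bw))
    mean = sum(blocks) / len(blocks)
    bits = 0
    for value in blocks:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


class SolvedBackgrounds:
    """Hypothetical attacker-side cache: background hash -> slot x recorded
    the first time that background was solved (by hand or another method)."""

    def __init__(self, max_distance: int = 6) -> None:
        self.max_distance = max_distance
        self.entries: List[Tuple[int, int]] = []

    def remember(self, img: Image, slot_x: int) -> None:
        self.entries.append((average_hash(img), slot_x))

    def lookup(self, img: Image) -> Optional[int]:
        """Return the stored x for the closest known background, or None."""
        h = average_hash(img)
        best = min(self.entries, key=lambda e: hamming(h, e[0]), default=None)
        if best is None or hamming(h, best[0]) > self.max_distance:
            return None
        return best[1]
```

The lookup tolerates a few differing bits, so small changes such as recompression noise don't break the match.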

As if this weren't bad enough, the puzzle is not keyboard accessible, meaning users with physical disabilities are unable to access the service. It also requires fine motor control, which is hard to achieve with alternative input devices such as trackballs.

These vendors also offer easy-to-beat audio challenges that cannot be armored against automation. This anti-user approach leaves users with both hearing and motor impairments unable to pass the challenge. An audio CAPTCHA does not solve every accessibility scenario, so it shouldn't be treated as a “one size fits all” solution.

The illusion of security

Taken together, it's difficult to see how such a system could offer anything other than the illusion of security.

In summary, static biometric CAPTCHAs:

- Only protect against naive attackers

- Create unnecessary friction for little benefit

- Don’t universally support every input device

- Collect too little input, and are too simple, to support effective biometric analysis

- Use the exact same design implemented by multiple vendors

- Are broken by off-the-shelf systems

Our conclusion: these types of CAPTCHAs give the impression of security while annoying good consumers and compromising accessibility. If these vendors do manage to defend their clients, it isn't because of these product features.

Why Arkose MatchKey is superior

At Arkose Labs, when we put a challenge in front of users, there's a legitimate reason for doing so. That reason is based on intelligence gained from our own challenge-response solution, which sits on login and registration flows and delivers dynamic response orchestration tailored to the attack pattern.

Our state-of-the-art challenges are unlike anything that has been done before, and they are the result of deep expertise in art, product, and technical engineering. In practical terms, they are unbeatable by bots or human-assisted bots. Here's why:

- Arkose MatchKey has more than 200 challenge types developed in direct response to active attacks.

- Our challenges are tested against state-of-the-art machine learning. By iterating against ML, we understand exactly what it would take for an attacker to automate the challenge. Our strongest challenges:

  - Produce more than 1,250 variations of the problem per puzzle.

  - Could result in more than half a million individual images that an attacker would have to hand-label, which would take more than 25,000 human hours.
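The post doesn't state how long each image takes to hand-label, but the two figures imply a rate. Taking both lower bounds at face value, and assuming the 25,000 hours cover exactly the 500,000 images, the estimate works out to roughly three minutes of human effort per image:

$$
\frac{25{,}000\ \text{h} \times 60\ \text{min/h}}{500{,}000\ \text{images}} = \frac{1{,}500{,}000\ \text{min}}{500{,}000\ \text{images}} = 3\ \text{min per image}
$$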

Our challenges are tested on humans, too, and they meet strict usability benchmarks. In fact, our standard-difficulty challenges have minimal impact on good-user completion rates. Our challenges have full A11y accreditation. They are keyboard-, touchpad-, and trackball-compatible, and our audio puzzles see much higher solve rates than other audio CAPTCHAs.

If you’re weighing your options for enterprise-level defense and account security, you should know about the limitations of fake and ineffective CAPTCHA solutions. Book a meeting with Arkose Labs, and we’ll be happy to explain why our challenges are built specifically to stop automated CAPTCHA solving.