Overview

The Internet ecosystem is growing more complex as the number of connected devices per person keeps rising. In developed countries, it was estimated that each person owned on average 10 connected devices in 2020, a number that will likely increase to 15 by 2030. These devices differ widely in their capabilities, operating systems, and levels of sophistication.

Consumers can also choose among a multitude of web browsers and applications to access services on the Internet. This variety and flexibility creates growing opportunities for attackers, who constantly adjust their strategies to blend in with legitimate traffic and avoid detection.

Web security systems need to adapt to this complexity. Simple negative security detection systems that focus on discovering known bad signatures are ineffective in this environment. Sure, they may catch some of the obvious bad traffic, but sometimes at the expense of legitimate users getting caught in the middle. More advanced detection systems need to include positive security methods that learn and recognize what is legitimate in order to achieve the best level of accuracy. Machine learning can help with bot detection. It is a bit of an amorphous term, so before we go any further, let’s take a minute to define it in the context in which it is used at Arkose Labs: according to the Oxford Dictionary, “machine learning is the use and development of computer systems that are able to learn and adapt without following explicit instructions, by using algorithms and statistical models to analyze and draw inferences from patterns in data”.

Using machine learning for bot detection is complex and requires careful consideration. As with any project of this kind, a single model cannot cover the whole attack surface. Several models that leverage different data points are needed to look at the traffic from different angles; combining them improves detection accuracy and reduces attackers’ opportunity for success. Getting good accuracy with machine learning requires first clearly defining the problem to be solved, getting a good data source, labeling the data where possible, carefully selecting the data points that fit the use case, and finally developing the model that will process the data and deliver the most accurate results. On top of that, the results of the system must be explainable. Finally, because one size does not fit all, the system must be tunable so as to achieve the best accuracy across a multitude of websites and user bases.

Neglecting any of the steps will result in detection inaccuracies. A single data point that is not well understood could result in reducing the overall accuracy and efficiency of the entire detection method. Choosing the right machine learning algorithm for bot detection requires running several experiments and in the end, the choice will depend on the accuracy and the cost of running the model. Good quality, labeled data is, unfortunately, hard to come by in the web security world, so assume that you'll have to come up with your own way of labeling the data and define your own source of truth. Arkose Labs does this by using machine learning throughout the product life cycle. Below are a few use cases where it is applied.

Learning the Internet Ecosystem

For efficient bot and fraud detection, Arkose Labs collects device and browser characteristics from end-user devices using JavaScript, along with network information on the server side. It’s important to select the most stable data points that best define the characteristics of a device. Good examples include the operating system (Windows, iOS, Linux), the platform type (MacIntel, Win32), and the screen size. Data that relates more to user preferences, such as languages or timezone, should be avoided in this case. The carefully selected data points are used to create a signature, which is then evaluated for each new session to detect fraudulent activity.
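As a minimal sketch of the idea, a signature can be derived by hashing only the stable attributes. The field names, the `device_signature` helper, and the choice of SHA-256 are assumptions made for illustration; the article does not describe how Arkose Labs actually builds its signatures.

```python
import hashlib
import json

# Hypothetical field names: the article does not list the exact data points.
STABLE_FIELDS = ("os", "platform", "screen_width", "screen_height")


def device_signature(attributes: dict) -> str:
    """Hash only the stable fields so user preferences do not alter the signature."""
    stable = {field: attributes.get(field) for field in STABLE_FIELDS}
    # sort_keys gives a canonical serialization, so equal inputs hash equally.
    canonical = json.dumps(stable, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


session_a = {
    "os": "Windows", "platform": "Win32",
    "screen_width": 1920, "screen_height": 1080,
    "language": "en-US", "timezone": "America/New_York",
}
# Same device characteristics, different user preferences.
session_b = dict(session_a, language="fr-FR", timezone="Europe/Paris")

print(device_signature(session_a) == device_signature(session_b))  # prints True
```

Because language and timezone are left out of the hash, two sessions from the same kind of device map to the same signature even when the user preferences differ.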

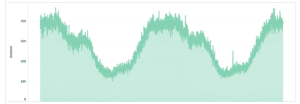

An unsupervised machine learning algorithm continuously evaluates the signatures collected from customer traffic to establish a ground truth of what “good Internet signatures” should look like. The learning is based on heuristics and statistical models that group several data points in a structured way so as to help recognize common signatures of different types of devices seen over time across the customer base in various conditions. If a given signature is common enough, it will be added to the ground truth and by extension be considered legitimate. In this positive security model, any signature that is not added to the ground truth is technically considered suspicious, especially if seen at a higher than expected volume.
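The "common enough" rule can be illustrated with a deliberately simplified sketch that uses a plain frequency threshold. The real system relies on heuristics and statistical models that are not described here, so the `min_share` cutoff, the function names, and the sample signatures below are all assumptions.

```python
from collections import Counter


def learn_ground_truth(signatures, min_share=0.01):
    """Return the signatures whose share of observed traffic is at least min_share."""
    counts = Counter(signatures)
    total = sum(counts.values())
    if total == 0:
        return set()
    return {sig for sig, n in counts.items() if n / total >= min_share}


def is_suspicious(signature, ground_truth):
    """Positive security model: anything outside the ground truth is suspicious."""
    return signature not in ground_truth


# Made-up traffic sample: two common signatures and one rare combination.
observed = ["chrome-win"] * 600 + ["safari-ios"] * 395 + ["odd-combo"] * 5
ground_truth = learn_ground_truth(observed, min_share=0.01)

print(sorted(ground_truth))  # prints ['chrome-win', 'safari-ios']
print(is_suspicious("odd-combo", ground_truth))  # prints True
```

Re-running `learn_ground_truth` on a recent window of traffic is one simple way to let rare signatures drop out of the ground truth over time.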

The system re-evaluates and re-learns the ground truth several times a day to keep up with continuous changes as users adopt new technology and software. Signatures that become less common over time are gradually treated as obsolete and invalid. The diagram below illustrates the process at a high level:

Finding Traffic Pattern Anomalies

The emergence of new patterns from one hour to the next at high volume is usually the sign of a volumetric attack starting up. A pattern here could be a JavaScript fingerprint as previously described, or traffic coming from a network (ISP or AS Number). Using machine learning to compare the traffic patterns between the last and previous hour of traffic can help reveal these anomalies. For example, if the top signatures for a given customer typically come from popular Android and iOS mobile devices and all of a sudden the trend shifts to a large portion of the traffic coming from Windows systems, this can be the sign of an attack. Bot mitigation systems with no challenge workflow have no option but to block once they flag such high-risk traffic. However, blocking the activity right away may be risky, as commercial events sometimes cause these radical changes. At the very least, though, the anomalous traffic should be challenged so that it can be evaluated further.
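The hour-over-hour comparison can be sketched as a simple share comparison. This is an illustrative stand-in, not the model Arkose Labs uses: the `min_share` and `min_growth` thresholds and the sample traffic are assumptions.

```python
from collections import Counter


def shares(patterns):
    """Convert a list of observed patterns into each pattern's share of traffic."""
    counts = Counter(patterns)
    total = sum(counts.values())
    return {p: n / total for p, n in counts.items()} if total else {}


def emerging_patterns(previous_hour, last_hour, min_share=0.05, min_growth=3.0):
    """Flag patterns that are sizeable in the last hour and grew sharply (or are new)."""
    before = shares(previous_hour)
    now = shares(last_hour)
    flagged = []
    for pattern, share in now.items():
        baseline = before.get(pattern, 0.0)
        grew = baseline == 0.0 or share / baseline >= min_growth
        if share >= min_share and grew:
            flagged.append(pattern)
    return sorted(flagged)


# Made-up traffic: mostly mobile in the previous hour, then a surge from Windows.
previous_hour = ["android"] * 50 + ["ios"] * 45 + ["windows"] * 5
last_hour = ["android"] * 40 + ["ios"] * 35 + ["windows"] * 60

print(emerging_patterns(previous_hour, last_hour))  # prints ['windows']
```

The same function works for network patterns such as AS numbers, since it only needs a list of labels per hour.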

In this negative security model, any anomaly found will be temporarily added to a black list and challenged. The challenge strategy will be dynamically adjusted based on how the user associated with the anomalous signature reacts to the challenge. The challenge complexity will be escalated if the user is unable to resolve the challenge properly and relaxed otherwise.
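The escalate-or-relax behavior can be sketched as a small state holder. The five-level difficulty scale, the starting level, and the `ChallengeState` name are hypothetical and only illustrate the feedback loop described above.

```python
from dataclasses import dataclass

# Hypothetical difficulty scale; the article does not define one.
MIN_LEVEL, MAX_LEVEL = 1, 5


@dataclass
class ChallengeState:
    """Tracks the challenge difficulty for one anomalous signature."""
    level: int = 2

    def record(self, solved: bool) -> int:
        """Relax the challenge after a correct answer, escalate after a failure."""
        if solved:
            self.level = max(MIN_LEVEL, self.level - 1)
        else:
            self.level = min(MAX_LEVEL, self.level + 1)
        return self.level


state = ChallengeState()
for outcome in (False, False, True):
    print(state.record(outcome))  # prints 3, then 4, then 3
```

Clamping the level keeps repeated failures from growing the difficulty without bound and keeps repeated successes from removing the challenge entirely.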

Traffic Prediction

A lot can be learned from looking at historical traffic patterns. Humans are creatures of habit and generally interact with a website during their daytime, with a peak around mid-afternoon and a second, generally smaller, peak after dinner before traffic slows down during night hours.

During the weekend, commerce sites see a traffic increase while financial institutions see far less traffic compared to the weekdays.

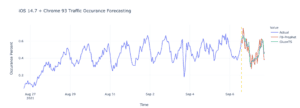

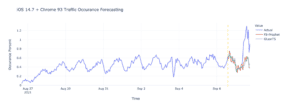

Observing historical traffic patterns can help predict future trends, which helps with capacity planning but, more importantly in the case of web security, helps detect traffic anomalies that may be the sign of volumetric attacks. Arkose Labs uses well-established ML algorithms such as Facebook Prophet or GluonTS to help predict traffic patterns. In the example below, the ML model is able to predict, based on historical data (blue line), what the traffic should look like (red and green lines) over the next 24h.

If, however, the actual traffic pattern (blue line) is higher than the prediction, an alert will trigger for someone to investigate.
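The alerting logic can be illustrated without a forecasting library. The sketch below swaps Prophet or GluonTS for a naive seasonal baseline (the mean and standard deviation of each hour of the day across past days), so it shows the shape of the check rather than the models named above. The `k` multiplier and the synthetic traffic are assumptions.

```python
from statistics import mean, stdev


def hourly_forecast(history, k=3.0):
    """history: one list of 24 hourly request counts per past day.

    Returns a list of (expected, upper_bound) pairs, one per hour of the day.
    """
    forecast = []
    for hour in range(24):
        values = [day[hour] for day in history]
        expected = mean(values)
        spread = stdev(values) if len(values) > 1 else 0.0
        forecast.append((expected, expected + k * spread))
    return forecast


def hours_to_alert(actual, forecast):
    """Return the hours where actual traffic exceeds the predicted upper bound."""
    return [hour for hour, (count, (_, upper)) in enumerate(zip(actual, forecast))
            if count > upper]


# Synthetic data: seven days that follow the same daily shape with small drift.
base = [100 + 10 * hour for hour in range(24)]
history = [[count + day for count in base] for day in range(7)]
forecast = hourly_forecast(history)

# Today looks normal except for a doubling of traffic at 14:00.
actual = [count + 3 for count in base]
actual[14] = base[14] * 2

print(hours_to_alert(actual, forecast))  # prints [14]
```

A dedicated forecasting model would replace `hourly_forecast` and supply its own prediction interval, while the comparison in `hours_to_alert` would stay the same.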

The traffic prediction can be coupled with an advanced detection method designed to detect abuse coming from legitimate devices. Considering the wide variety of device and software configurations used by Internet consumers, historical data may show that the expected traffic coming from Google Chrome v93 running on an iOS device (iPhone) should account for up to around 0.5% of the overall traffic. If, however, the ratio of such traffic increases by a factor of 2 or more, as in the above example, this could be a strong indication of a volumetric attack leveraging a “good signature”, an increasingly common strategy we see attackers adopting as they realize that fingerprint randomization techniques lead to invalid signatures that are easy to detect. The excess traffic seen on that particular signature will be challenged to mitigate the attack.
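The arithmetic behind this check is easy to sketch. The function below uses the 0.5% expected share and the factor of 2 from the paragraph above as defaults; the function name and the request counts in the example are made up for illustration.

```python
def excess_to_challenge(signature_requests, total_requests,
                        expected_share=0.005, factor=2.0):
    """Return how many requests on a signature exceed its expected volume.

    Returns 0 while the observed share stays below factor * expected_share.
    """
    if total_requests <= 0:
        return 0
    observed_share = signature_requests / total_requests
    if observed_share < expected_share * factor:
        return 0
    expected_requests = expected_share * total_requests
    return round(signature_requests - expected_requests)


# 1,500 of 100,000 requests is 1.5%, three times the expected 0.5%.
print(excess_to_challenge(1500, 100_000))  # prints 1000
# 600 of 100,000 requests is 0.6%, still below the 2x trigger.
print(excess_to_challenge(600, 100_000))   # prints 0
```

Challenging only the excess, rather than every session on the signature, limits the friction added for the legitimate users who share that signature.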

Challenge Hardening

Sessions that the Arkose Labs detection layer flags are typically challenged. The challenge-response is dynamically adapted to the threat detected: higher-risk sessions with a significant number of anomalies are challenged with the most complex and time-consuming puzzles, whereas lower-risk sessions with fewer anomalies are challenged with easier puzzles. Unlike other vendors, Arkose Labs designs and develops its own 3D images, which are used within simple puzzles that challenged users have to interact with. The puzzles are designed to be easy and fun for humans, with nearly 100% first-time pass rates, but difficult for machines.

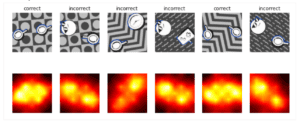

Attackers are constantly attempting to develop botnets fitted with computer vision in order to automatically solve the puzzles. In order to stay ahead of this trend, Arkose Labs has designed a machine learning system used internally to test the strength and resiliency of each new puzzle produced by the technical artist team against attacks that leverage computer vision. The ensemble consists of several state-of-the-art models, including ResNet-50, ResNet-152, DenseNet, ResNeXt, and Wide ResNet. These models are pre-trained and able to recognize a large array of objects, animals, situations, and landscapes. Beyond giving us insight into the resilience of our puzzles, the process also helps us identify what objects, animals, or situations these models are not able to recognize by default and helps drive innovations in puzzle designs.
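One way to picture how an ensemble scores a puzzle is soft voting: average each model's per-option probabilities and pick the highest. The sketch below assumes that approach and uses made-up probabilities in place of real model outputs; the article does not say how the ensemble combines its models, and the function names are hypothetical.

```python
def ensemble_pick(model_scores):
    """model_scores: one list of per-option probabilities per model.

    Returns the index of the option with the highest average probability.
    """
    n_options = len(model_scores[0])
    averaged = [sum(scores[i] for scores in model_scores) / len(model_scores)
                for i in range(n_options)]
    return max(range(n_options), key=averaged.__getitem__)


def solve_rate(puzzles):
    """puzzles: list of (model_scores, correct_index) pairs."""
    solved = sum(1 for scores, answer in puzzles if ensemble_pick(scores) == answer)
    return solved / len(puzzles)


# Illustrative numbers only: three models scoring three candidate images.
puzzles = [
    ([[0.7, 0.2, 0.1], [0.5, 0.4, 0.1], [0.3, 0.6, 0.1]], 0),  # ensemble picks 0
    ([[0.4, 0.3, 0.3], [0.2, 0.5, 0.3], [0.3, 0.4, 0.3]], 2),  # ensemble picks 1
]

print(solve_rate(puzzles))  # prints 0.5
```

A solve rate close to random guessing (one in three here) would suggest the puzzle resists the ensemble, while a rate well above it would suggest the design needs rework.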

In the example below, the puzzle consists of selecting the image with two icons that are the same. After being trained on labeled data, the model is able to recognize correct and incorrect answers. The saliency map (or heat map) shows which part of the image matters the most in determining correctness. We use training sets of different sizes in order to determine the minimum number of labeled images required to obtain a good level of accuracy.

Taking the problem one step further, we also use adversarial image techniques in order to generate new sets of images so as to make the attackers’ learning less effective and force them to constantly label and train their model, thus increasing their cost.
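The article does not name the adversarial technique used. As one well-known example, the fast gradient sign method (FGSM) nudges every pixel by a small amount in the direction that increases the model's loss. The sketch below applies it to a toy logistic classifier over four pixel values; the weights, pixels, and `epsilon` are made up, and a real attack model would be a deep network rather than a linear one.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, pixels):
    """Probability that the toy classifier assigns to the 'correct answer' class."""
    return sigmoid(sum(w * x for w, x in zip(weights, pixels)) + bias)


def sign(value):
    return (value > 0) - (value < 0)


def fgsm(weights, bias, pixels, label, epsilon=0.1):
    """Shift each pixel by epsilon in the direction that increases the log loss."""
    p = predict(weights, bias, pixels)
    # For logistic regression, d(loss)/d(x_i) = (p - label) * w_i.
    gradient = [(p - label) * w for w in weights]
    # Clamp to [0, 1] so the result is still a valid normalized image.
    return [min(1.0, max(0.0, x + epsilon * sign(g)))
            for x, g in zip(pixels, gradient)]


# Hypothetical toy model and image (pixel intensities normalized to [0, 1]).
weights, bias = [2.0, -1.0, 0.5, 1.5], -1.0
pixels = [0.8, 0.2, 0.6, 0.7]

adversarial = fgsm(weights, bias, pixels, label=1, epsilon=0.1)
print(predict(weights, bias, pixels) > predict(weights, bias, adversarial))  # prints True
```

The perturbed image stays within `epsilon` of the original on every pixel, yet the classifier's confidence in the correct answer drops, which is the effect that forces an attacker to keep relabeling and retraining.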

Conclusion

Those are a few examples where Arkose Labs currently uses machine learning for bot detection. It is deeply ingrained in our product and our strategy moving forward. Machine learning does not solve every problem and should be used wisely so as to get the best outcome. But it is definitely the right tool for solving some of the complex problems we are dealing with on a daily basis.